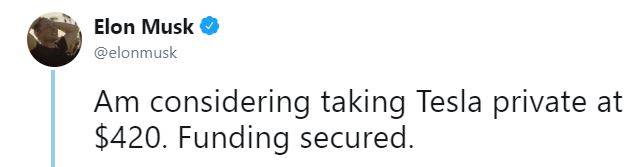

I follow both Elon Musk and Bored Elon Musk on Twitter. Sometimes when I scroll through my timeline I see a tweet from Elon Musk and think, "that's crazy talk". Other times I read a tweet from Bored Elon Musk and think, "this is so crazy it might work".

I use the tweepy library to grab tweets from Elon Musk (https://twitter.com/elonmusk) and Bored Elon Musk (https://twitter.com/BoredElonMusk) via the Twitter API, then use a classification algorithm to label each tweet as real or fake. For now I just use a Multinomial Naive Bayes classifier. My theory is that it shouldn't be that easy for the algorithm to identify real Elon Musk tweets, so I expect more misclassifications and a lower accuracy score than would be "acceptable" if we actually needed to predict things well.

The first step is to create an app on the Twitter Developer platform in order to generate consumer and access keys. These will allow you to access the Twitter API through tweepy. The keys are specific to your Twitter Developer and/or user account, so they should be kept private. I generally like to put them in a config.py file and import them. If you put your code on GitHub, you can create a config_example.py file that shows other developers how to set up their own config.py. Also, make sure to add config.py to your .gitignore file.
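As a rough sketch, a config_example.py could look something like this. The four variable names match the import used in this post; the placeholder values and the environment variable names in the fallback are my own illustrative assumptions, not something Twitter or tweepy prescribes.

```python
# config_example.py: copy this file to config.py and fill in your own keys.
# Placeholder values only; never commit real credentials.
import os

# Assumption: each key is read from an environment variable if one is set
# (these variable names are hypothetical), otherwise a placeholder is used.
consumer_key = os.environ.get("TWITTER_CONSUMER_KEY", "your-consumer-key")
consumer_secret = os.environ.get("TWITTER_CONSUMER_SECRET", "your-consumer-secret")
access_token = os.environ.get("TWITTER_ACCESS_TOKEN", "your-access-token")
access_token_secret = os.environ.get("TWITTER_ACCESS_TOKEN_SECRET", "your-access-token-secret")
```

Reading from the environment is optional. Plain string assignments work just as well, as long as config.py itself stays out of version control.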

from config import consumer_key, consumer_secret, access_token, access_token_secret

import tweepy

%matplotlib inline

import matplotlib.pyplot as plt

import datetime

import numpy as np

import pandas as pd

import seaborn as sns; sns.set()

# Creating the authentication object

auth = tweepy.OAuthHandler(consumer_key, consumer_secret)

# Setting your access token and secret

auth.set_access_token(access_token, access_token_secret)

# Creating the API object while passing in auth information

api = tweepy.API(auth)

Function to grab a user's tweets

Don't you love when you can write a function to keep your code DRY? I know I do.

def get_tweets(listOfTweets, user, numOfTweets):
    # Iterate through the most recent tweets of a specific user
    for tweet in tweepy.Cursor(api.user_timeline, screen_name=user).items(numOfTweets):
        # Add tweets in this format
        dict_ = {'Screen Name': tweet.user.screen_name,
                 'Tweet Created At': pd.to_datetime(tweet.created_at),
                 'Tweet Text': tweet.text,
                 }
        listOfTweets.append(dict_)
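One possible variation, offered as a sketch rather than the code used in this post: build the rows from any iterable of tweet-like objects and return a new list instead of appending to one passed in. That makes the row format easy to check without calling the API. The name tweets_to_rows and the fake tweet below are hypothetical.

```python
from datetime import datetime, timezone
from types import SimpleNamespace


def tweets_to_rows(tweets):
    """Convert tweet-like objects into the row dicts used in this post."""
    return [
        {
            'Screen Name': tweet.user.screen_name,
            'Tweet Created At': tweet.created_at,
            'Tweet Text': tweet.text,
        }
        for tweet in tweets
    ]


# Hypothetical stand-in for a tweepy Status object, for illustration only
fake_tweet = SimpleNamespace(
    user=SimpleNamespace(screen_name='example_user'),
    created_at=datetime(2019, 1, 1, tzinfo=timezone.utc),
    text='This is a made-up tweet.',
)

rows = tweets_to_rows([fake_tweet])
print(rows[0]['Screen Name'])
```

With a live API object, the same function should accept the items of a tweepy.Cursor over api.user_timeline in place of the fake list.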

Grab tweets from both accounts

I decided to get 1000 tweets from each account. Ideally I would want more, but neither of these accounts tweets as much as, say, Donald Trump. I've listed 5 tweets from each account below. A lot of the real Elon Musk's tweets are replies or retweets. Those will be taken care of when I clean up the text.

data = []

get_tweets(data, 'BoredElonMusk', 1000)

get_tweets(data, 'elonmusk', 1000)

data_df = pd.DataFrame(data)

data_df.head()

data_df.tail()

Cleaning tweet text

Tweets can be alphabet soup. Luckily, there are ways to clean up the text using regular expressions (my favourite!!!) and Beautiful Soup. I'm looking to clean up by doing the following:

- Remove mentions/replies ("@") and retweets ("RT")

- Convert HTML encoding to characters ("&amp;" to "&")

- Remove hyperlinks

- Remove foreign languages (I saw some Russian???)

- Remove hashtags and numbers

Shout out to this post for giving me this list.

data_df['tweet_cleaned'] = data_df['Tweet Text']

pat1 = r'RT @[\w_]+: '

pat2 = r'@[A-Za-z0-9]+'

pat3 = r'https?://[A-Za-z0-9./]+'

pat4 = r'[^a-zA-Z ]+'

combined_pat = r'|'.join((pat1, pat2, pat4))

from bs4 import BeautifulSoup

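# regex=True is required in pandas 2.0+, where str.replace defaults to literal matching
data_df['tweet_cleaned'] = data_df.tweet_cleaned.str.replace(pat3, '', regex=True)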

data_df['tweet_cleaned'] = [BeautifulSoup(text, 'lxml').get_text() for text in data_df['tweet_cleaned'] ]

data_df['tweet_cleaned'] = data_df.tweet_cleaned.str.replace(combined_pat, '', regex=True)

data_df.head()
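To see what these steps do to a single tweet, here is a small sketch using only the standard library. The patterns are the ones from this post; the clean_tweet name and the sample tweet are made up, and html.unescape is my stand-in for the Beautiful Soup step, so it only approximates that part.

```python
import html
import re

# Same patterns as in the post
pat1 = r'RT @[\w_]+: '             # retweet prefix
pat2 = r'@[A-Za-z0-9]+'            # mentions
pat3 = r'https?://[A-Za-z0-9./]+'  # hyperlinks
pat4 = r'[^a-zA-Z ]+'              # anything that is not a Latin letter or a space
combined_pat = '|'.join((pat1, pat2, pat4))


def clean_tweet(text):
    """Apply the cleaning steps in the same order as the DataFrame code."""
    text = re.sub(pat3, '', text)   # drop hyperlinks first
    text = html.unescape(text)      # "&amp;" -> "&" (stand-in for Beautiful Soup)
    return re.sub(combined_pat, '', text)


# Made-up tweet, for illustration only
sample = 'RT @someone: Rockets &amp; cars are cool https://t.co/abc123 #Mars2024'
print(clean_tweet(sample).split())
```

Note that the word after a "#" survives; only the symbol and the digits are stripped. Removed pieces can also leave double spaces behind, which is why the example splits on whitespace before printing.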

Create training and test data sets and then create text features

What would we do without scikit-learn? I use the lovely train_test_split function to split my data into training and test samples. With the default test size of 25%, the training data has 1500 tweets and the test data has 500. I also use the feature_extraction module to turn each tweet into word counts weighted by TF-IDF (term frequency-inverse document frequency). Finally, I use a pipeline so I can apply the transformation and fit the model in one step.

from sklearn.model_selection import train_test_split

X = data_df['tweet_cleaned']

y = data_df['Screen Name']

Xtrain, Xtest, ytrain, ytest = train_test_split(X, y, random_state=1991)

# convert content of string into vector of numbers using TF-IDF

# use pipeline to attach vectorized text data to multinomial bayes classifier

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.naive_bayes import MultinomialNB

from sklearn.pipeline import make_pipeline

model = make_pipeline(TfidfVectorizer(), MultinomialNB())

# apply model to training data and predict labels for test data

model.fit(Xtrain, ytrain)

ypred = model.predict(Xtest)

# need to see how the classifier orders the classes so I can use them for the confusion matrix

labels = model.classes_

labels.tolist()
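For intuition about what the TF-IDF step produces, here is a simplified pure-Python sketch. It uses the smoothed IDF formula and L2 normalisation that TfidfVectorizer applies by default, but it tokenises with a plain whitespace split, so it will not reproduce scikit-learn's output exactly. The tfidf function and the toy documents are made up for illustration.

```python
import math
from collections import Counter


def tfidf(docs):
    """Return one {term: weight} dict per document, L2-normalised."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents that contain each term
    df = Counter(term for tokens in tokenized for term in set(tokens))
    # Smoothed IDF: ln((1 + n) / (1 + df)) + 1
    idf = {term: math.log((1 + n_docs) / (1 + count)) + 1
           for term, count in df.items()}
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights = {term: tf * idf[term] for term, tf in counts.items()}
        # Guard against empty documents, whose norm would be zero
        norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0
        vectors.append({term: w / norm for term, w in weights.items()})
    return vectors


# Toy documents, for illustration only
docs = ['rockets are cool', 'apps are cool', 'rockets rockets rockets']
vectors = tfidf(docs)
print(vectors[1])
```

In the second document, "apps" gets a larger weight than "are" or "cool" because it appears in fewer documents. Words that are rare across the corpus are exactly what the classifier leans on to tell the two accounts apart.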

Model evaluation: confusion matrix and accuracy score

The confusion matrix shows the number of correct and incorrect predictions on the test data set. The accuracy score is just the proportion of correct predictions. It would appear that tweets from BoredElonMusk are easier to classify, with 234 correct predictions out of 258. There were 206 correct predictions on tweets from Elon Musk out of 242.

The accuracy score is 0.88, which isn't bad if you just want anything better than a coin flip. I'm sure there are a bunch of rabid data scientists out there who hate this measure. Note to self: read this later.

from sklearn.metrics import confusion_matrix

labels = model.classes_

mat = confusion_matrix(ytest, ypred)

sns.heatmap(mat, square=True, annot=True, cbar=False, cmap='Blues', fmt='g',
            xticklabels=labels.tolist(), yticklabels=labels.tolist())

plt.xlabel('Predicted value')

plt.ylabel('True value');

# see fraction of predicted labels that match their true value

from sklearn.metrics import accuracy_score

accuracy_score(ytest, ypred)
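The numbers quoted above can be checked by hand. This sketch rebuilds the confusion matrix from the counts reported in this post and derives the accuracy, the per-class recall, and a majority-class baseline. The off-diagonal cells are inferred by subtraction, and the baseline is my own addition for comparison.

```python
# Counts reported in the post (rows: true label, columns: predicted label)
labels = ['BoredElonMusk', 'elonmusk']
mat = [[234, 258 - 234],
       [242 - 206, 206]]

total = sum(sum(row) for row in mat)
correct = sum(mat[i][i] for i in range(len(labels)))
accuracy = correct / total

# Per-class recall: correct predictions divided by the true count of that class
recall = {label: mat[i][i] / sum(mat[i]) for i, label in enumerate(labels)}

# Baseline: always guess the more common class in the test set
baseline = max(sum(row) for row in mat) / total

print(f'accuracy = {accuracy:.2f}')
for label, value in recall.items():
    print(f'recall[{label}] = {value:.3f}')
print(f'majority-class baseline = {baseline:.3f}')
```

Because the two classes are nearly balanced in the test set, the baseline sits close to a coin flip, so accuracy is a fairly honest summary here.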

And now for something completely different...

What would this post be without a word cloud!?!?

import sys

!{sys.executable} -m pip install wordcloud

from wordcloud import WordCloud, STOPWORDS, ImageColorGenerator

bored_elon = data_df[data_df['Screen Name'] == 'BoredElonMusk']

elon = data_df[data_df['Screen Name'] == 'elonmusk']

stop_words = ['BoredElonMusk', 'elonmusk', 'youre'] + list(STOPWORDS)

# Create and generate a word cloud image:

wordcloud = WordCloud(max_font_size=50, max_words=100, background_color="white",
                      stopwords=stop_words).generate(' '.join(elon['tweet_cleaned']))

# Display the generated image:

plt.imshow(wordcloud, interpolation='bilinear')

plt.axis("off")

plt.show()

It would appear that the real Elon Musk talks a lot about Tesla. That probably bodes well for his shareholders. Focus, right? Bored Elon Musk seems to care about services and apps.

# Create and generate a word cloud image:

wordcloud = WordCloud(max_font_size=50, max_words=100, background_color="white",
                      stopwords=stop_words).generate(' '.join(bored_elon['tweet_cleaned']))

# Display the generated image:

plt.imshow(wordcloud, interpolation='bilinear')

plt.axis("off")

plt.show()
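A word cloud is, roughly, a picture of word frequencies, so the same comparison can be made with plain counts. This sketch uses only the standard library; the top_words name and the sample texts are made up, and it ignores the two-word phrases that the wordcloud package can also pick up.

```python
from collections import Counter


def top_words(texts, stop_words, n=5):
    """Return the n most frequent words across texts, ignoring stop words."""
    stop = {word.lower() for word in stop_words}
    words = ' '.join(texts).lower().split()
    return Counter(word for word in words if word not in stop).most_common(n)


# Made-up texts and stop words, for illustration only
sample_texts = ['the rocket landed', 'the rocket is on the pad', 'a new app idea']
sample_stop_words = ['the', 'is', 'on', 'a']
print(top_words(sample_texts, sample_stop_words, n=3))
```

Passing elon['tweet_cleaned'] or bored_elon['tweet_cleaned'] with the stop_words list should give a ranked list to read alongside each cloud.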